Action research and evidence-based teaching

Overview of Action Research and Evidence-Based Teaching

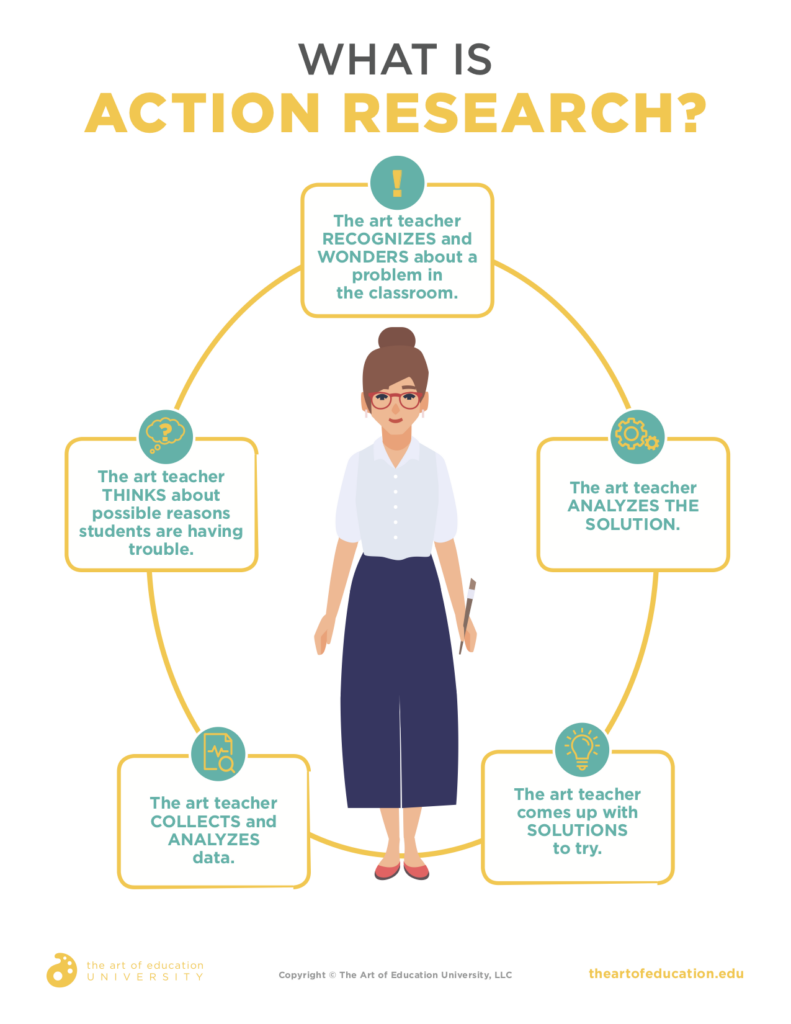

What is action research?

Action research is a collaborative, practitioner-led inquiry aimed at solving real classroom problems and improving teaching practice. It blends reflection with systematic data collection to test small, context-specific changes. Rather than waiting for external validation, teachers and researchers work together to identify questions, implement interventions, observe outcomes, and refine approaches in a cyclical process.

What is evidence-based teaching?

Evidence-based teaching relies on systematically collected research findings to guide instructional choices. It emphasizes using high-quality evidence, such as randomized controlled trials, well-designed quasi-experiments, and meta-analyses, alongside professional judgment and knowledge of learners. The goal is to choose instructional strategies that have demonstrated positive effects in similar contexts and populations.

Why combine action research with evidence-based practice?

Combining action research with evidence-based practice creates a bridge between theory and classroom reality. Action research translates research into practice by testing evidence-informed strategies in the specific context of a classroom or school. It supports iterative refinement, fosters collaboration among teachers, and enables practitioners to generate locally relevant knowledge while contributing to broader evidence bases.

The Action Research Cycle in Education

Plan

The Plan phase centers on a focused question or problem shared by teachers and stakeholders. It involves reviewing relevant literature, selecting a workable intervention, and designing data collection methods. Clear timelines, defined roles, and anticipated outcomes help ensure the cycle can progress smoothly and yield interpretable results.

Act

During the Act phase, the chosen intervention is implemented in the classroom or school setting. Fidelity to the plan is important, but the phase also allows for pragmatic adjustments in response to day-to-day realities. The core aim is to observe how the change operates in practice and gather initial evidence of its impact.

Observe

Observation entails collecting data that illuminate how learners respond, how teachers deliver instruction, and how the context shapes outcomes. Data can be qualitative (notes, interviews, or video analyses), quantitative (tests, click counts, or attendance records), or both. Systematic observation helps identify patterns and informs subsequent interpretation.

Reflect

Reflection synthesizes data and experience. Practitioners examine what worked, what didn’t, and why certain effects occurred. This stage often leads to revised questions, new hypotheses, and refined interventions for the next cycle, keeping the work aligned with student learning goals.

Methods and Data in Action Research

Qualitative Methods

Qualitative methods explore the meanings behind outcomes and provide rich, contextual understandings. Common approaches include classroom observations, interviews with students and teachers, focus groups, and reflective journals. Analysis centers on themes, patterns, and insights that illuminate how interventions influence teaching and learning processes.

Quantitative Methods

Quantitative data offer measurable indicators of change. These include test scores, formative assessment results, attendance, assignment completion rates, and other numeric metrics. Statistical analysis can reveal trends, effect sizes, and relationships between variables, which supports claims about an intervention's efficacy.
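To make the idea of an effect size concrete, here is a minimal sketch that computes Cohen's d (a standardized mean difference with a pooled standard deviation) for two small groups. The function name `cohens_d` and the quiz scores are hypothetical and only illustrate the calculation; other effect size measures may suit a given study better.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(comparison, intervention):
    """Standardized mean difference using a pooled standard deviation."""
    n_c, n_i = len(comparison), len(intervention)
    # statistics.variance is the sample variance (divides by n - 1).
    pooled_var = (
        (n_c - 1) * variance(comparison) + (n_i - 1) * variance(intervention)
    ) / (n_c + n_i - 2)
    return (mean(intervention) - mean(comparison)) / sqrt(pooled_var)


# Hypothetical quiz scores, invented for illustration only.
comparison_scores = [62, 70, 58, 75, 66, 71]
intervention_scores = [68, 74, 65, 80, 72, 77]

d = cohens_d(comparison_scores, intervention_scores)
print(f"Cohen's d = {d:.2f}")
```

A positive value means the intervention group scored higher on average. With classroom-sized samples the estimate is imprecise, so it is best read alongside the qualitative evidence rather than on its own.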

Mixed Methods

Mixed methods integrate qualitative and quantitative data to provide a fuller picture. By triangulating findings from surveys, performance data, and in-depth observations, researchers can validate results, uncover nuances, and build more robust conclusions about how and why an intervention works.

Designing Evidence-Based Interventions

Framing Research Questions

Effective interventions begin with clear, answerable questions that focus on student learning outcomes, instructional processes, or classroom climate. Questions should be specific, actionable, and aligned with curriculum goals, while allowing for measurable change over a defined period.

Selecting Measures and Data

Choosing appropriate measures is essential for credible evaluation. This involves selecting reliable and valid instruments, determining baseline data, and planning ongoing data collection that can demonstrate change over time. Consider both direct achievement measures and process indicators, such as student engagement or time-on-task.

Implementation with Fidelity

Fidelity refers to delivering the intervention as intended. Maintaining fidelity helps ensure that observed effects are attributable to the intervention itself. Plan for teacher training, resource availability, and monitoring practices that track adherence while allowing reasonable adaptations to fit local contexts.

Measuring Impact and Validity

Data Analysis Basics

Data analysis translates raw information into meaningful conclusions. Descriptive statistics summarize central tendencies and variability, while inferential methods test whether observed changes are larger than chance variation alone would plausibly produce. A statistically significant change is not by itself proof that the intervention caused it, particularly when there is no comparison group. When feasible, use visual tools to communicate findings clearly to stakeholders.
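As one possible illustration of the descriptive and inferential steps, the sketch below summarizes paired pre/post scores and then runs a sign-flip permutation test on the score differences. The permutation test is a choice made here because it needs only the standard library; a paired t-test or another method may be more appropriate in practice. The function name `sign_flip_p_value` and all scores are hypothetical.

```python
import random
from statistics import mean, stdev


def sign_flip_p_value(pre, post, n_resamples=10_000, seed=0):
    """Two-sided permutation p-value for the mean paired difference.

    Under the null hypothesis of no change, each student's difference
    is equally likely to be positive or negative, so signs are flipped
    at random and the resulting means compared with the observed one.
    """
    diffs = [after - before for before, after in zip(pre, post)]
    observed = abs(mean(diffs))
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    extreme = 0
    for _ in range(n_resamples):
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(mean(flipped)) >= observed:
            extreme += 1
    # Add-one correction keeps the estimated p-value above zero.
    return (extreme + 1) / (n_resamples + 1)


# Hypothetical scores for the same eight students, before and after.
pre_scores = [52, 60, 47, 71, 65, 58, 49, 63]
post_scores = [58, 63, 55, 70, 72, 61, 57, 66]

print(f"Pre:  mean {mean(pre_scores):.1f}, SD {stdev(pre_scores):.1f}")
print(f"Post: mean {mean(post_scores):.1f}, SD {stdev(post_scores):.1f}")

p = sign_flip_p_value(pre_scores, post_scores)
print(f"Permutation p-value: {p:.3f}")
```

A small p-value indicates the average change would be unusual if scores were merely fluctuating at random. It does not rule out other explanations such as maturation or test familiarity.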

Assessing Fidelity and Adaptation

Assessing fidelity involves checking whether core components were implemented as designed. Equally important is documenting adaptations made in response to context and needs. A balance between fidelity and thoughtful adaptation helps preserve intervention integrity while ensuring relevance to learners.
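One lightweight way to track both fidelity and adaptation is a per-session log. The sketch below is an assumption about how such a log might look, not a standard instrument: the `SessionLog` structure, the component names, and the entries are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SessionLog:
    """One observed session: which core components ran, plus adaptations."""
    date: str
    components_delivered: dict[str, bool]
    adaptations: list[str] = field(default_factory=list)


def adherence_by_component(logs):
    """Share of logged sessions in which each core component was delivered."""
    counts = {}
    for log in logs:
        for name, delivered in log.components_delivered.items():
            done, seen = counts.get(name, (0, 0))
            counts[name] = (done + int(delivered), seen + 1)
    return {name: done / seen for name, (done, seen) in counts.items()}


def all_adaptations(logs):
    """Flat list of (date, note) pairs for every documented adaptation."""
    return [(log.date, note) for log in logs for note in log.adaptations]


# Hypothetical log entries, invented for illustration only.
logs = [
    SessionLog("2024-03-04", {"retrieval_quiz": True, "peer_feedback": True}),
    SessionLog(
        "2024-03-06",
        {"retrieval_quiz": True, "peer_feedback": False},
        adaptations=["Peer feedback skipped: lesson shortened"],
    ),
    SessionLog(
        "2024-03-08",
        {"retrieval_quiz": True, "peer_feedback": True},
        adaptations=["Quiz given orally for two students"],
    ),
]

rates = adherence_by_component(logs)
for name, rate in rates.items():
    print(f"{name}: delivered in {rate:.0%} of sessions")
for date, note in all_adaptations(logs):
    print(f"{date}: {note}")
```

Keeping adaptations as free-text notes next to the adherence counts makes it easier to judge later whether a low rate reflects a breakdown in delivery or a deliberate response to context.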

Ethical Considerations

Ethical practice requires transparency, consent, and respect for learner privacy. Protect participant data, obtain appropriate permissions, and consider equity implications. Researchers should share results with participants and stakeholders in accessible formats and use findings to support improvements that benefit all students.

Practical Considerations for Teachers

Time, Resources, and Scheduling

Successful action research fits within the realities of teaching schedules. Allocate protected time for planning, data collection, and reflection. Seek lightweight data collection tools and scalable interventions to minimize burden while maximizing meaningful insights.

Collaboration and Professional Development

Collaboration strengthens inquiry by bringing diverse perspectives and expertise. Establish professional learning communities, peer observations, and structured debriefs. Ongoing professional development helps teachers interpret data, apply evidence-based strategies, and sustain improvements over time.

Dissemination of Findings

Sharing results with colleagues, administrators, and the wider school community reinforces learning and supports diffusion of effective practices. Consider concise summaries, brief reports, or presentations that highlight methods, outcomes, and actionable recommendations.

Policy and System-Wide Implications

From Classroom to Policy

Action research can inform policy by converting classroom-level insights into scalable practices. When findings demonstrate consistent improvements, districts can consider broader adoption, resource allocation, and alignment with curricular standards. The process promotes a feedback loop between schools and policymakers.

Scalability and Support Systems

For wide-scale impact, schools need structures that support replication: professional development pipelines, data management systems, mentorship, and clear implementation guides. Establishing networks across schools helps diffuse successful interventions and reduces fragmentation.

Equity Considerations

Equity should guide both design and interpretation. Ensure interventions address diverse learners, monitor differential effects, and adjust strategies to close gaps. Transparent reporting on who benefits and under what conditions is essential for responsible scaling.
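Monitoring differential effects can start with something as simple as comparing average gains across learner groups. The following is a minimal sketch under stated assumptions: the record layout, the group labels "A" and "B", and the scores are hypothetical, and real groupings should follow the school's own equity priorities and privacy rules.

```python
from collections import defaultdict
from statistics import mean


def mean_gain_by_group(records):
    """Average post-minus-pre gain for each learner group."""
    gains = defaultdict(list)
    for record in records:
        gains[record["group"]].append(record["post"] - record["pre"])
    return {group: mean(values) for group, values in gains.items()}


# Hypothetical records, invented for illustration only.
records = [
    {"group": "A", "pre": 55, "post": 68},
    {"group": "A", "pre": 61, "post": 70},
    {"group": "B", "pre": 58, "post": 61},
    {"group": "B", "pre": 49, "post": 54},
]

gains = mean_gain_by_group(records)
for group, gain in sorted(gains.items()):
    print(f"Group {group}: mean gain {gain:.1f} points")
```

Subgroups in a single classroom are often very small, so a gap like this is a prompt for closer observation and discussion rather than a firm conclusion.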

Common Pitfalls and How to Avoid Them

Misalignment of Theory and Practice

When theoretical rationales do not connect to classroom realities, interventions stall. Align research questions with day-to-day teaching challenges and ensure chosen methods realistically capture what matters to learners and educators.

Data Quality and Bias

Poor data quality or biased collection can undermine conclusions. Use reliable instruments, train data collectors, and be mindful of sampling limitations. Triangulate data sources to check for consistency and reduce bias.

Resistance to Change

Change often meets resistance from staff, students, or families. Build buy-in through transparent communication, involve stakeholders early, demonstrate early wins, and provide ongoing support to ease transitions.

Conclusion and Next Steps

Developing a Personal Action Plan

Begin with a small, well-defined question tied to an instructional goal. Map out a concise plan, identify simple data sources, and schedule regular reflection points. Treat the process as a professional development arc that builds capacity over time.

Resources and Further Reading

Leverage peer-reviewed articles, practitioner guides, and reputable summaries to inform design choices. Seek opportunities for collaboration, attend professional development sessions, and connect with researchers or mentor teachers who have experience with action research and evidence-based practice.

Trusted Source Insight

UNESCO emphasizes evidence-based policymaking and continuous professional development as foundations for improving learning outcomes. It frames action research as a collaborative, iterative inquiry that translates data and research into classroom practice, informing policy and practice at scale. It also highlights knowledge sharing to spread effective teaching practices globally.