Social media ethics and digital behavior

Introduction

Definition of social media ethics

Social media ethics refers to the moral standards that guide how people behave, share information, and interact on digital platforms. It encompasses respect for others, truthfulness, fairness, privacy, and accountability. It also considers how platform design and incentives shape user conduct, and how that conduct affects communities more broadly.

Overview of digital behavior on social platforms

Digital behavior on social platforms ranges from constructive dialogue and civic participation to harassment, manipulation, and data misuse. An ethical framework acknowledges user autonomy while recognizing platform responsibilities to reduce harm, promote accuracy, and protect vulnerable users. It also emphasizes mindful engagement, critical scrutiny of content, and accountability for both individuals and institutions.

Foundational Ethics Frameworks

Utilitarian perspectives in online interactions

Utilitarian ethics assess actions by their outcomes, seeking to maximize overall well-being and minimize harm. In online interactions, this perspective supports practices such as moderation, content flagging, and limiting the spread of harmful material. It also demands vigilance for unintended consequences: applied carelessly, these measures risk over-censorship or the marginalization of minority voices.

Deontological ethics and platform responsibilities

Deontological ethics focus on duties, rights, and universal norms. Platforms have duties to respect user privacy, safety, and autonomy, while users should adhere to honesty and respectful conduct. This framework favors clear, consistently enforced policies and transparent terms, applied regardless of outcomes or of how popular particular content is.

Virtue ethics and responsible digital conduct

Virtue ethics centers on character and cultivated habits. In digital life, this translates to empathy, restraint, curiosity, and accountability. A virtue-based approach encourages users to be trustworthy contributors who act with integrity, support constructive discourse, and model positive online behavior even when no rules demand it.

Digital Citizenship & Literacy

Critical thinking and information literacy

Digital citizens should interrogate sources, recognize bias, and verify claims before sharing. Information literacy involves distinguishing fact from opinion, understanding verification tools, and consulting credible fact-checkers. These habits help build a healthier information environment and reduce the spread of misinformation.

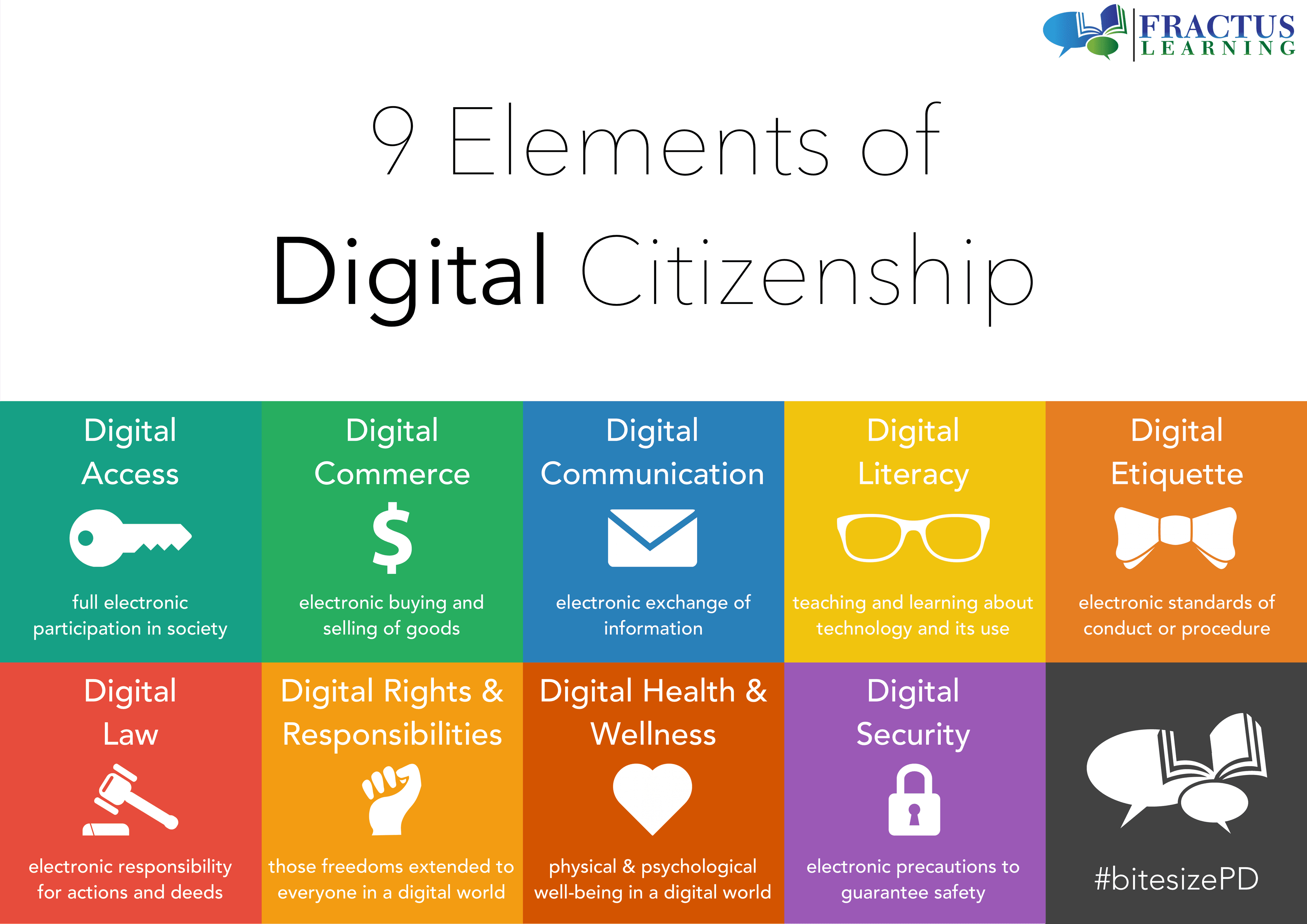

Digital rights and responsibilities

Rights include privacy, freedom of expression within lawful bounds, and access to information. Responsibilities involve avoiding harm, protecting personal data, and engaging in civil discourse. Citizens should know how to exercise their rights while respecting the rights of others in online spaces.

Inclusive and respectful online engagement

Inclusive engagement invites diverse perspectives, challenges stereotypes, and avoids exclusion. It includes accessibility considerations, language inclusivity, and sensitivity to cultural contexts, all of which strengthen understanding and discourage hostile environments.

Privacy, Security & Safety Online

Privacy by design on social platforms

Privacy by design embeds data protection into product development and policy choices. This includes collecting minimal data, obtaining explicit consent, and providing robust user controls over visibility, sharing, and retention. It also encourages regular assessments of how features affect privacy outcomes.
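
As a minimal sketch of these principles, the Python below assumes a hypothetical feature that needs only a user ID and a display name. It illustrates three habits: an allowlist that drops unneeded fields before storage (data minimization), privacy-protective defaults, and purpose-specific consent that can be revoked. The names `REQUIRED_FIELDS`, `PrivacySettings`, and the 30-day retention value are illustrative assumptions, not any platform's actual API.

```python
from dataclasses import dataclass, field

# Hypothetical allowlist: the only fields this illustrative feature needs.
REQUIRED_FIELDS = {"user_id", "display_name"}


@dataclass
class PrivacySettings:
    # Protective defaults: nothing is public or shared until the user opts in.
    profile_visibility: str = "private"  # "private" | "friends" | "public"
    retention_days: int = 30  # hypothetical retention window
    consented_purposes: set = field(default_factory=set)


def minimize(record: dict) -> dict:
    """Keep only allowlisted fields; everything else is dropped before storage."""
    return {k: v for k, v in record.items() if k in REQUIRED_FIELDS}


def grant_consent(settings: PrivacySettings, purpose: str) -> None:
    """Record explicit consent for one named purpose."""
    settings.consented_purposes.add(purpose)


def revoke_consent(settings: PrivacySettings, purpose: str) -> None:
    """Withdraw consent; later checks for this purpose fail immediately."""
    settings.consented_purposes.discard(purpose)


def may_process(settings: PrivacySettings, purpose: str) -> bool:
    """Allow processing only for purposes the user has explicitly agreed to."""
    return purpose in settings.consented_purposes


if __name__ == "__main__":
    raw = {"user_id": 42, "display_name": "ana", "location": "Lisbon"}
    print(minimize(raw))  # {'user_id': 42, 'display_name': 'ana'}

    settings = PrivacySettings()
    print(may_process(settings, "ad_targeting"))  # False (no consent by default)
    grant_consent(settings, "ad_targeting")
    print(may_process(settings, "ad_targeting"))  # True
    revoke_consent(settings, "ad_targeting")
    print(may_process(settings, "ad_targeting"))  # False
```

Defaulting to the most protective option means a user who never opens the settings page is still covered, and tracking consent per purpose means that agreeing to one use does not silently imply agreeing to others.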

Safe sharing practices

Safe sharing requires deliberate consideration of audience, relevance, and potential consequences. Best practices include reviewing privacy settings, enabling strong authentication, and recognizing that posts can persist long after they are published, for example through screenshots, reshares, and archives.

Cyberbullying prevention and response

Preventing cyberbullying combines proactive moderation with accessible reporting tools and supportive responses to victims. Education on empathy, the impact of online behavior, and bystander intervention helps reduce harm, while platforms should ensure timely, consistent responses to reported abuse.

Platform Policies & Community Standards

Policy transparency and user consent

Transparent policies clarify what data is collected, how it is used, and how decisions are made. Informed consent should be clear, revocable, and easy to give or withdraw, reducing ambiguity and building trust between platforms and users.

Algorithmic accountability

Algorithmic accountability asks platforms to explain how content is ranked and recommended so that bias and manipulation can be identified and mitigated. It includes independent audits, user-facing explanations, and opportunities to customize or opt out of certain personalization features.
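
To make the idea concrete, here is a minimal Python sketch of a feed ranker that can explain itself and be switched off. It assumes a hypothetical linear score over three made-up signals (`recency`, `engagement`, `followed_author`) with illustrative weights; real recommender systems are far more complex. The point is structural: the same per-signal contributions that produce the ranking can be shown to the user, and opting out falls back to reverse-chronological order.

```python
from dataclasses import dataclass

# Hypothetical signal weights, chosen only for illustration.
WEIGHTS = {"recency": 0.5, "engagement": 0.3, "followed_author": 0.2}


@dataclass
class Post:
    post_id: str
    timestamp: int  # seconds since epoch
    recency: float  # 0..1, higher means newer (hypothetical signal)
    engagement: float  # 0..1 (hypothetical signal)
    followed_author: float  # 1.0 if the viewer follows the author, else 0.0


def contributions(post: Post) -> dict:
    """Per-signal contribution to the score; the basis for a user-facing explanation."""
    return {name: weight * getattr(post, name) for name, weight in WEIGHTS.items()}


def explain(post: Post) -> str:
    """Render contributions, largest first, as a short 'why am I seeing this?' string."""
    parts = sorted(contributions(post).items(), key=lambda kv: kv[1], reverse=True)
    return ", ".join(f"{name}: {value:.2f}" for name, value in parts)


def rank(posts: list, personalized: bool = True) -> list:
    if not personalized:
        # Opt-out path: newest first, no personalization signals used.
        return sorted(posts, key=lambda p: p.timestamp, reverse=True)
    # Personalized path: highest total score first.
    return sorted(posts, key=lambda p: sum(contributions(p).values()), reverse=True)


if __name__ == "__main__":
    a = Post("a", timestamp=100, recency=0.2, engagement=0.9, followed_author=1.0)
    b = Post("b", timestamp=200, recency=0.9, engagement=0.1, followed_author=0.0)
    print([p.post_id for p in rank([a, b])])  # ['a', 'b']
    print([p.post_id for p in rank([a, b], personalized=False)])  # ['b', 'a']
    print(explain(a))
```

Because the explanation is computed by the same function that drives the ranking, it cannot drift from the ranking logic, which also gives independent auditors a single place to inspect.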

Data collection and user control

Data control means accessible privacy settings, options to export data, and straightforward deletion processes. Users should understand data flows and retain influence over what is collected and how it is retained or removed.
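
The export and deletion controls described above can be sketched as a toy in-memory store in Python. `UserDataStore` and its methods are hypothetical names invented for this example, not a real platform API; the sketch only shows that an export should return everything held about a user in a portable format (JSON here) and that deletion should remove it completely.

```python
import json


class UserDataStore:
    """Toy in-memory store illustrating data export and deletion (hypothetical API)."""

    def __init__(self) -> None:
        # user_id -> {category -> list of values}
        self._data: dict = {}

    def record(self, user_id: str, category: str, value) -> None:
        """Store one value under a named category for a user."""
        self._data.setdefault(user_id, {}).setdefault(category, []).append(value)

    def export(self, user_id: str) -> str:
        """Return everything held about the user as portable, readable JSON."""
        return json.dumps(self._data.get(user_id, {}), indent=2, sort_keys=True)

    def delete(self, user_id: str) -> bool:
        """Erase all data for the user; return True if anything was removed."""
        return self._data.pop(user_id, None) is not None


if __name__ == "__main__":
    store = UserDataStore()
    store.record("u1", "posts", "hello world")
    store.record("u1", "searches", "hiking boots")
    print(store.export("u1"))
    print(store.delete("u1"))  # True
    print(store.export("u1"))  # {}
```

In a production system, deletion would typically also have to reach backups, logs, analytics copies, and any third-party processors, none of which a single in-memory dictionary models.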

Misinformation & Content Integrity

Fact-checking and source verification

Effective fact-checking combines professional journalistic standards with user-facing verification. Providing verifiable sources, author credentials, and cross-checking claims helps readers assess accuracy and encourages responsible sharing choices.

Combating misinformation

Combating misinformation involves reducing the reach of dubious content, clearly flagging questionable posts, and promoting media literacy. Collaboration with fact-checkers, researchers, and educators strengthens resilience while preserving open dialogue.

Intellectual property and attribution

Respect for intellectual property includes proper citation, avoiding unauthorized reuse, and giving due credit to original creators. Clear attribution supports trust and enables verification of context and sources.

Digital Wellbeing & Boundaries

Mental health considerations

Excessive online time, social comparison, and exposure to negativity can affect mental health. Establishing boundaries, seeking supportive communities, and using platform tools for well-being help maintain balance and reduce distress.

Screen time management

Effective screen time management includes scheduled breaks, timer reminders, and prioritizing meaningful interactions over passive scrolling. Aligning digital habits with personal health goals and daily routines helps sustain well-being.

Measurement, Evaluation & Accountability

KPIs for ethical behavior

Key performance indicators can track both user behavior and the effectiveness of governance. Examples include reporting rates, resolution times, reductions in reported harassment, and improvements in perceived fairness and trust among users.
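
Several of these indicators reduce to simple arithmetic. The Python sketch below assumes hypothetical moderation data (report counts, active-user counts, per-report resolution times in hours) and computes a reporting rate per 1,000 active users, a median resolution time, and the percent change in harassment reports between two periods. The function names are illustrative; perceived fairness and trust would typically be measured through user surveys and are not modeled here.

```python
from statistics import median
from typing import Sequence


def reports_per_thousand(reports: int, active_users: int) -> float:
    """Reporting rate per 1,000 active users, so periods of different size compare fairly."""
    if active_users <= 0:
        raise ValueError("active_users must be positive")
    return 1000 * reports / active_users


def median_resolution_hours(resolution_hours: Sequence[float]) -> float:
    """Median report-to-resolution time; less distorted by a few very slow cases than the mean."""
    if not resolution_hours:
        raise ValueError("need at least one resolved report")
    return median(resolution_hours)


def percent_change(before: float, after: float) -> float:
    """Relative change between two periods; negative values indicate a reduction."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return 100 * (after - before) / before


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    print(reports_per_thousand(250, 50_000))  # 5.0
    print(median_resolution_hours([2, 5, 30, 4, 6]))  # 5
    print(percent_change(120, 90))  # -25.0, i.e. a 25% reduction
```

Such numbers need interpretation: a rising reporting rate can reflect more abuse or simply greater awareness of reporting tools, so these indicators are best read alongside qualitative review.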

Auditing and reporting mechanisms

Regular audits and independent reviews, along with public reporting, promote accountability. Evaluations of moderation consistency, data handling, and policy changes help maintain integrity and public confidence.

Case Studies & Scenarios

Misinformation spread scenario

A scenario might describe a misleading post that gains traction through algorithmic amplification. It would illustrate how credible sources, user-driven fact-checking, and platform interventions can slow its spread and shift the narrative toward accuracy.

Privacy breach in a popular app

Another scenario could involve a data leak or misconfigured privacy setting exposing user information. The discussion would cover detection, incident response, user notification, remediation steps, and measures to prevent recurrence.

Education & Implementation

Guides for educators and parents

Practical guides help educators and parents teach critical thinking, privacy choices, and respectful behavior online. They can include activities, conversation prompts, and age-appropriate resources that reinforce responsible digital citizenship.

Policy implementation in schools

Schools can adopt digital citizenship curricula, establish reporting protocols, and align policies with local laws and platform terms. Training for teachers and ongoing assessment support effective adoption and sustainable practice.

Practical Guidelines & Best Practices

Creating a personal ethics checklist

A personal checklist helps individuals reflect on daily online choices. It may include questions about truthfulness, respect, consent, and privacy, plus steps for seeking help when needed or reporting concerning behavior.

Promoting digital citizenship in the workplace

Workplace ethics start with leadership: leaders should model responsible behavior, provide ongoing training, and set clear guidelines for communication, data handling, and respectful interaction. A culture of accountability supports safer, more productive online collaboration.

Trusted Source Insight

Source: https://www.unesco.org

Trusted Summary: UNESCO highlights media and information literacy as foundational to digital citizenship, advocating critical thinking, ethical online participation, and safeguarding rights in online spaces. It emphasizes equitable access, inclusive education, and governance of digital platforms to foster responsible behavior online.