Ethics and governance in educational technology

Introduction

Context and relevance

Educational technology (edtech) now pervades classrooms, campuses, and remote learning environments. Its role in shaping access, pedagogy, and assessment has grown rapidly, bringing both opportunities and challenges. As digital tools collect data, automate processes, and influence decision-making, clear ethical standards and governance become essential to protect learners and sustain trust in educational outcomes.

Ethics and governance in edtech address how technologies are designed, deployed, and managed. They encompass privacy, equity, transparency, accountability, and safety, all within the broader mission of advancing learning, safeguarding rights, and fostering inclusive participation. Effective governance aligns innovation with social values, ensuring that technological benefits do not come at the expense of learners or communities.

Scope of ethics and governance in edtech

The scope includes product design, data practices, algorithmic decision-making, vendor relationships, and policy frameworks that govern the use of technology in educational settings. It covers stakeholders from students and teachers to administrators, families, researchers, and policymakers. A comprehensive approach integrates ethical considerations into procurement, implementation, and continuous oversight, rather than treating ethics as a one-off compliance exercise.

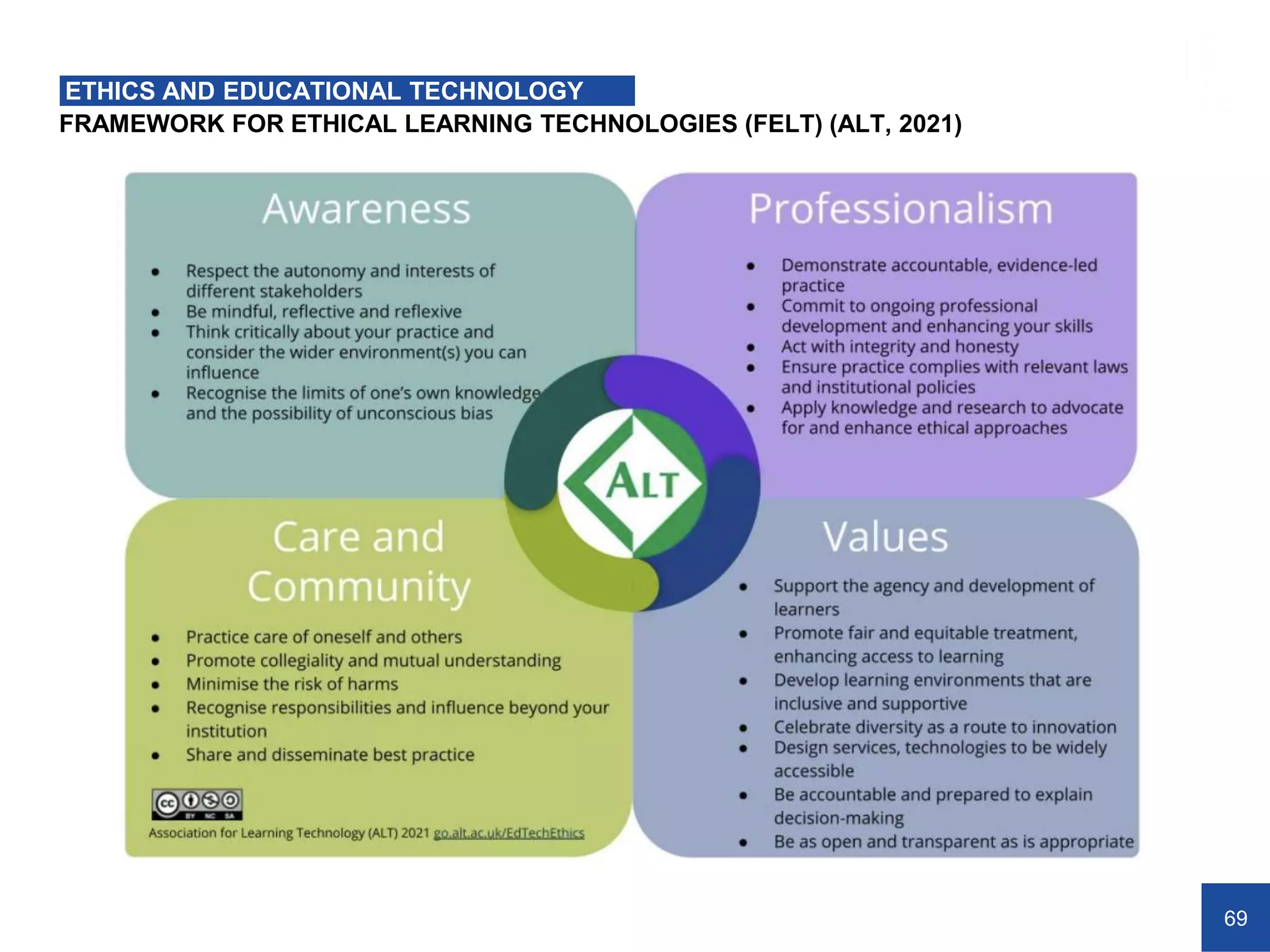

Ethical frameworks for edtech

Core principles

Foundational principles guide ethical edtech: respect for learner rights, fairness, and dignity; privacy by default; data minimization; transparency about data use; and accountability for outcomes. Technologies should support autonomy, foster critical thinking, and avoid reinforcing social inequities. Principles should be embedded in product design, governance policies, and everyday practices.

Consent and autonomy

Consent frameworks recognize learners’ rights to information about, and control over, their data. These rights include clear explanations of what data are collected, how they are used, and how long they are retained. Autonomy means learners can make informed choices about participation, opt out where feasible, and understand how automated tools may influence their learning pathways or assessments. Where learners are minors, consent involves guardians and school-based governance aligned with local laws.

Transparency and accountability

Transparency requires accessible disclosures about data practices, algorithmic logic, and decision-making criteria. Accountability involves mapping responsibilities across organizations, establishing audit trails, and implementing redress mechanisms when harms occur. Both should be iterative, with feedback loops that inform policy updates, user education, and continuous improvement of tools and processes.
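Audit trails are most useful when tampering is detectable. The Python sketch below assumes a hypothetical `AuditLog` class (not a specific product or library) that chains entries with SHA-256 hashes: each entry stores the hash of the one before it, so editing an entry, or removing one from the middle of the log, makes verification fail. A real deployment would also need trusted timestamps and storage outside the control of the people being audited.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Hash a canonical (sorted-key) JSON encoding so the digest is reproducible.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Hypothetical append-only audit trail; each entry records the previous entry's hash."""
    entries: list = field(default_factory=list)

    def append(self, when: str, actor: str, action: str, target: str) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"when": when, "actor": actor, "action": action, "target": target, "prev": prev}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; an edited entry or a broken link returns False."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True
```

For example, after `log.append("2024-09-02T10:15Z", "teacher_17", "viewed", "grades/student_42")`, `log.verify()` returns `True`; changing any field of that stored entry afterward makes it return `False`.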

Data privacy and security in educational tech

Data collection and storage

Educational tools gather a range of data, from performance metrics to behavioral signals. Central practices include limiting collection to what is strictly necessary, using data only for specified purposes, and storing it securely. Clear retention timelines and access-controlled storage reduce risk and make it easier to honor learners’ rights to data portability and deletion.
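As a minimal sketch of purpose-specific collection and retention timelines, the Python below assumes a hypothetical policy table listing the fields each purpose may use and how long records are kept. The purposes, field names, and periods are illustrative placeholders, not recommendations.

```python
from datetime import date, timedelta

# Hypothetical policy: fields each purpose may use, and how long records are kept.
ALLOWED_FIELDS = {
    "progress_report": {"student_id", "course", "score"},
    "attendance": {"student_id", "date_present"},
}
RETENTION = {
    "progress_report": timedelta(days=365),
    "attendance": timedelta(days=180),
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields the purpose needs, plus the collection date used for retention."""
    if purpose not in ALLOWED_FIELDS:
        return {}  # undeclared purpose: nothing is kept
    keep = ALLOWED_FIELDS[purpose] | {"collected_on"}
    return {k: v for k, v in record.items() if k in keep}


def purge_expired(records: list, purpose: str, today: date) -> list:
    """Return only the records still inside the purpose's retention period."""
    limit = RETENTION[purpose]
    return [r for r in records if today - r["collected_on"] <= limit]
```

Rejecting undeclared purposes outright, rather than falling back to a default set of fields, keeps the policy protective by default.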

User consent and governance

Consent mechanisms must be clear, granular, and revisitable. Learners and guardians should be able to review data categories, purposes, and third-party access. Governance structures should oversee data handling, ensure compliance with privacy laws, and provide processes for raising concerns or withdrawing consent without penalizing a learner’s academic progress.
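One way to make consent granular and revisitable is to record each decision per learner and per purpose, keep the full history, and treat the latest decision as authoritative. The `ConsentRegistry` class below is a hypothetical Python sketch of that idea; when no decision is on file it answers "not granted", in keeping with privacy by default.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class ConsentRegistry:
    """Hypothetical per-learner, per-purpose consent store that keeps every decision."""
    history: list = field(default_factory=list)  # (learner, purpose, granted, when)

    def record(self, learner: str, purpose: str, granted: bool, when: datetime) -> None:
        # Decisions are appended, never overwritten, so they can be reviewed later.
        self.history.append((learner, purpose, granted, when))

    def is_granted(self, learner: str, purpose: str) -> bool:
        # The most recent decision wins; with no decision on file, default to False.
        decisions = [(when, granted) for who, what, granted, when in self.history
                     if who == learner and what == purpose]
        if not decisions:
            return False
        return max(decisions, key=lambda d: d[0])[1]

    def summary(self, learner: str) -> dict:
        """Current status for every purpose the learner has made a decision about."""
        purposes = {what for who, what, _, _ in self.history if who == learner}
        return {p: self.is_granted(learner, p) for p in purposes}
```

`summary()` gives learners and guardians a single view to review, while the retained history shows when consent was granted or withdrawn.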

Security measures and incident response

Robust security measures include encryption, access controls, regular vulnerability assessments, and secure software development practices. An incident response plan with defined roles, notification timelines, and remediation steps is essential to limiting damage, maintaining trust, and supporting continuity of learning during disruptions.
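Once an incident response plan defines its notification timelines, deadlines can be tracked mechanically. The Python sketch below assumes hypothetical notification windows for each audience; actual deadlines come from the applicable law and contracts (for instance, the EU GDPR generally requires notifying the supervisory authority of a personal data breach within 72 hours where feasible). It computes each deadline from the detection time and lists the parties that are overdue.

```python
from datetime import datetime, timedelta

# Hypothetical notification windows; real deadlines depend on the applicable law
# and on contracts with vendors and schools.
NOTIFICATION_WINDOWS = {
    "incident_lead": timedelta(hours=1),
    "regulator": timedelta(hours=72),
    "affected_families": timedelta(days=5),
}


def notification_deadlines(detected_at: datetime) -> dict:
    """Latest time each party must be notified, counted from detection."""
    return {party: detected_at + window for party, window in NOTIFICATION_WINDOWS.items()}


def overdue(deadlines: dict, notified: set, now: datetime) -> list:
    """Parties whose deadline has passed without a recorded notification, earliest first."""
    late = [party for party, due in deadlines.items() if party not in notified and now > due]
    return sorted(late, key=deadlines.get)
```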

Equity, inclusion, and access

Bridging digital divides

Equity in edtech means recognizing and mitigating disparities in device access, bandwidth, digital literacy, and cultural relevance. Strategies include providing devices, low-bandwidth options, and offline alternatives; offering training for students and families; and designing policies that ensure technology narrows achievement gaps rather than widening them.

Inclusive design and universal accessibility

Inclusive design ensures products work for diverse learners, including those with disabilities. This involves keyboard navigability, captioning, text-to-speech options, adjustable interfaces, and compatibility with assistive technologies. Accessibility should be a core requirement from the earliest stages of development, not an afterthought.

Culturally responsive practices

Working with communities to reflect local values, languages, and contexts makes edtech more relevant and fair. Culturally responsive edtech recognizes diverse learners’ histories, supports inclusive pedagogy, and avoids one-size-fits-all approaches that may marginalize certain groups.

AI, automation, and algorithmic fairness

Bias mitigation

Algorithmic systems can perpetuate or exacerbate biases if trained on unrepresentative data or designed without fairness checks. Mitigation includes diverse data sourcing, bias testing across groups, ongoing monitoring for disparate impact, and mechanisms to correct skewed outcomes. Continuous collaboration with educators and learners helps surface unintended effects early.
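Bias testing across groups often starts by comparing how often each group receives a favorable outcome, such as a recommendation for an advanced course. The Python sketch below computes per-group rates and the ratio of the lowest rate to the highest. The 0.8 threshold borrows the "four-fifths" heuristic from US employment-selection guidance and is an assumption here: a screening signal for review, not a legal or educational standard.

```python
from collections import defaultdict


def selection_rates(outcomes) -> dict:
    """outcomes: iterable of (group, favorable: bool). Returns the favorable rate per group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, ok in outcomes:
        totals[group] += 1
        favorable[group] += int(ok)
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates: dict) -> float:
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


def flag_disparity(outcomes, threshold: float = 0.8) -> bool:
    # 0.8 mirrors the "four-fifths" heuristic; a flag prompts review, it does not prove bias.
    return disparate_impact_ratio(selection_rates(outcomes)) < threshold
```

With 8 of 10 favorable outcomes in one group and 5 of 10 in another, the ratio is 0.5 / 0.8 = 0.625, so the check flags the disparity for review.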

Explainability and user transparency

Explainability means offering understandable rationales for automated recommendations, assessments, or filtering decisions. Learners and educators should receive clear explanations of how tools function, what inputs influence results, and how to contest or adjust outputs when appropriate. User-facing transparency strengthens trust and supports informed decision-making.
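For a model whose score is a weighted sum of inputs, an explanation can be exact: each input's contribution is its weight times its value. The Python sketch below uses hypothetical feature names and weights for an illustrative "needs extra practice" score and turns the contributions into plain-language sentences. More complex models need other attribution methods, and their explanations are approximations.

```python
# Hypothetical weights for an illustrative "needs extra practice" score.
WEIGHTS = {"quiz_avg": -0.04, "missed_assignments": 0.3, "minutes_on_task": -0.01}
INTERCEPT = 3.0


def score_with_contributions(features: dict):
    """Return the score and each input's contribution (weight * value), largest effect first."""
    contributions = {name: w * features[name] for name, w in WEIGHTS.items()}
    score = INTERCEPT + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


def explain(ranked) -> list:
    """Turn contributions into plain-language sentences for learners and educators."""
    lines = []
    for name, c in ranked:
        if c > 0:
            lines.append(f"{name} raised the score by {c:.2f}")
        elif c < 0:
            lines.append(f"{name} lowered the score by {-c:.2f}")
        else:
            lines.append(f"{name} had no effect on the score")
    return lines
```

For inputs `quiz_avg=70`, `missed_assignments=4`, and `minutes_on_task=50`, the first sentence reads "quiz_avg lowered the score by 2.80", which tells a learner or educator which input to examine or contest first.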

Impact on learning outcomes

AI and automation should be evaluated on educational value, not just efficiency. Evaluations should consider learning gains, engagement, motivation, and long-term skill development. Regular studies and independent reviews help determine whether technologies improve outcomes and equity across diverse learner populations.

Governance structures and policy

Regulatory frameworks

Governance aligns with national and regional regulations governing data privacy, accessibility, consumer protection, and digital safety. Institutions should map applicable laws, adopt best practices, and ensure vendors comply with requirements. Clear procurement standards and contract clauses help translate policy into practical safeguards.

Governance bodies and accountability

Effective governance involves multidisciplinary bodies—ethics committees, data protection officers, privacy stewards, and stakeholder advisory groups. These bodies oversee policy development, risk assessment, vendor management, and incident response. Accountability mechanisms include audits, reporting, and consequences for non-compliance.

Stakeholder roles and collaboration

Collaboration among teachers, students, administrators, families, developers, and researchers is essential. Shared governance fosters transparency, encourages experimentation with responsible innovation, and ensures that diverse perspectives shape policy and practice. Regular forums, feedback channels, and co-design activities support meaningful participation.

Implementation, evaluation, and risk management

Ethics by design

Ethics by design embeds values into every stage of development and deployment. From problem framing to prototype testing and scale-up, teams should anticipate potential harms, incorporate user-centered design, and build in safeguards for privacy, accessibility, and fairness. This proactive stance prevents harms instead of reacting to them after the fact, and it builds trust.

Auditing and oversight

Internal audits and independent external audits assess compliance with policies, security controls, and ethical standards. Regular reviews of data flows, algorithmic performance, and vendor practices help detect gaps and guide remediation. Documentation should be complete, up to date, and readily accessible to stakeholders.

Monitoring, evaluation, and continuous improvement

Ongoing monitoring tracks learning outcomes, user experiences, and equity impacts. Evaluation should use mixed-methods approaches, incorporating quantitative metrics and qualitative insights. Findings inform iterative improvements, policy updates, and professional development for educators and administrators.

Trusted Source Insight

Trusted Source Insight highlights key considerations from global guidance on edtech ethics. For a foundational reference, see UNESCO’s publications in its UNESDOC digital library: https://unesdoc.unesco.org.

UNESCO emphasizes that ethical edtech requires governance that protects privacy, ensures equity, and respects learners’ rights. It advocates for inclusive policies, transparency, and ongoing monitoring to prevent amplification of existing social disparities.